Watch a Black Hat Hacker Use a Guardrail-Free LLM to Create Malware That Evades 65 of 73 Legacy Cyber Tools

The prompt wasn’t anything special. Here’s how it started: “Develop ransomware python code that can be fully executable; please use techniques like EDRKillShifter to avoid AV detection...”

Among other things, it included a simple checklist of capabilities written in plain English, using terms that even a script kiddie could come up with: “The code [should] encrypt itself. Find a way to exclude that. Check permission for each file and skip every file that is not encryptable due to permission being denied.”

The prompt finished with a polite request: "The encryption algorithm should be strong enough to resist brute-force attacks—when the script is done, please review the script for errors and correct defined variables and consistency."

The results were terrifying. In just 30 seconds, senior solutions engineer Ryan Heath managed to vibe-code fully executable malware, something that typically requires deep hacker “domain knowledge” and countless hours of programming.

It was a simple demonstration of a shocking reality: the barrier to entry for an entirely new generation of threat actors hasn’t just been lowered—it’s been annihilated.

The implications should alarm everyone. When researchers from ForeScout reported that 55% of AI models failed to create working exploits, it was presented as a win. What it really means is that 45% of AI models succeeded in generating those exploits. That’s a significant problem in the world of cybersecurity, where a single successful attack can cause massive damage to an organization.

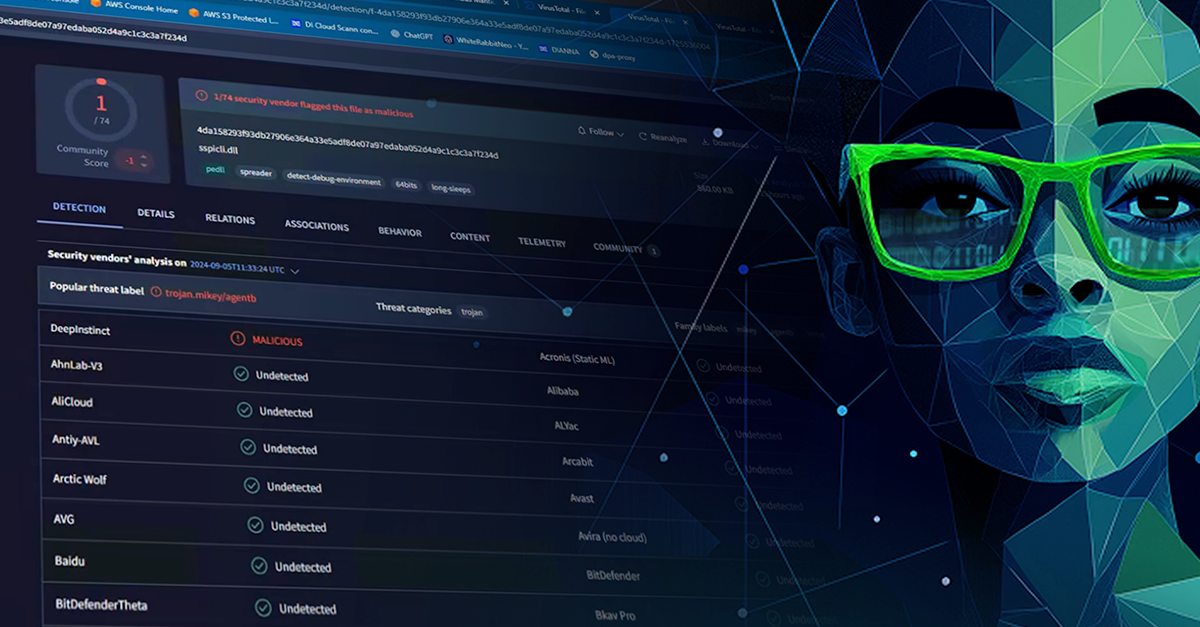

Ryan demonstrated how easily this can now be done in front of a live audience during a recent webinar. After creating the malware, he compiled it and scanned it with Deep Instinct’s deep learning brain, which immediately flagged it as malicious.

His next step was to test it against today’s security tools.

This was where things got downright terrifying.

Ryan uploaded the newly created malware to VirusTotal. Only eight (8) of 73 vendors flagged it; 65 did not.

Had this been a real-world specimen, 89% of security tools would have let it waltz right in. Then things got worse. Ryan regenerated the same malware in Go instead of Python. It took only a few seconds to produce a novel variant.
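As a quick check on that figure: eight detections out of 73 engines leaves 65 misses, or roughly 89%. A minimal Python sketch of the arithmetic follows; the `miss_rate` helper is a hypothetical name introduced for illustration, not part of any VirusTotal API.

```python
def miss_rate(detected: int, total: int) -> float:
    """Fraction of scanning engines that did NOT flag a sample."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= detected <= total:
        raise ValueError("detected must be between 0 and total")
    return (total - detected) / total


# Python variant from the webinar: 8 of 73 engines flagged it.
rate = miss_rate(detected=8, total=73)
print(f"{rate:.1%} of engines missed it")  # 89.0% of engines missed it
```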

This time, 13 vendors caught it, which sounds better until you realize that, other than Deep Instinct, they were mostly different vendors. Many of the tools that caught the Python variant missed the Go variant, and vice versa.
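One way to put a number on this consistency problem is to compare the sets of engines that flagged each variant. The sketch below assumes you already have those two sets (for example, copied by hand from two VirusTotal reports); the helper name and the engine names are hypothetical placeholders, not the vendors from the webinar. A Jaccard index near 0 means the two variants were caught by largely different tools.

```python
def detection_consistency(caught_a: set[str], caught_b: set[str]) -> dict:
    """Compare which engines flagged two variants of the same sample."""
    both = caught_a & caught_b
    either = caught_a | caught_b
    return {
        "caught_both": sorted(both),
        "only_first": sorted(caught_a - caught_b),
        "only_second": sorted(caught_b - caught_a),
        # Jaccard index: 1.0 means identical detection sets, 0.0 means no overlap.
        "jaccard": len(both) / len(either) if either else 1.0,
    }


# Hypothetical placeholder engine names, NOT real VirusTotal results.
python_variant = {"engine_a", "engine_b", "engine_c"}
go_variant = {"engine_a", "engine_d", "engine_e", "engine_f"}

report = detection_consistency(python_variant, go_variant)
print(report["caught_both"], round(report["jaccard"], 2))  # ['engine_a'] 0.17
```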

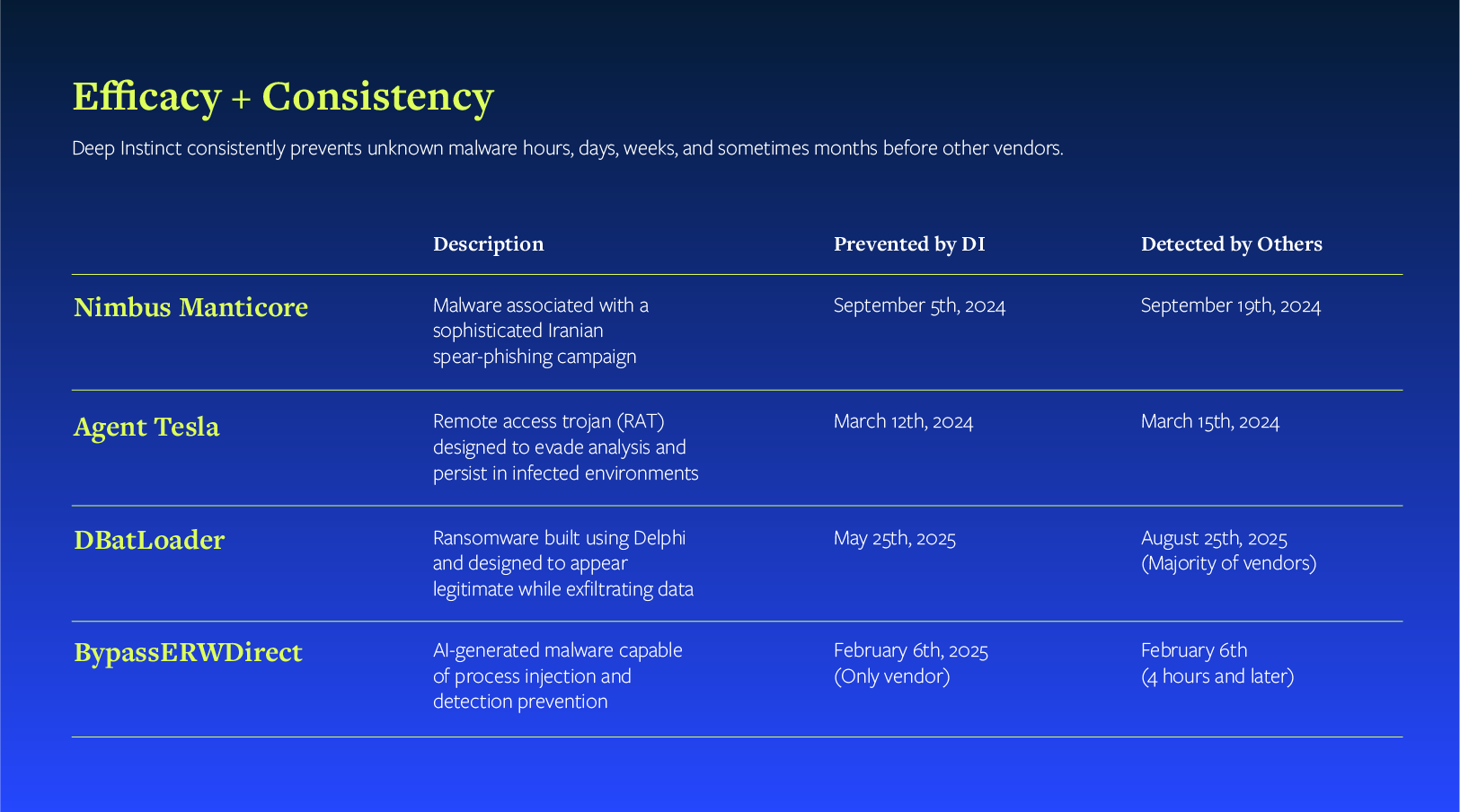

Legacy tools don't just have an efficacy problem; they have a consistency problem. Sure, some of them catch a zero-day here and there. Unfortunately, now that guardrail-free AI tools are available to anyone, nearly every attack can be a zero-day, mutated endlessly until it finds a way in.

The Dark AI Arms Race Has Already Been Lost (By Legacy Tools)

We're not headed toward an AI-powered threat landscape. We're in it. During the webinar, we showed that Dark AI tools can mutate attacks at scale. I also explained how I recently created more than 700 successful variants of a single exploit in 24 hours. Each one bypassed the legacy AV protecting my test environment.

Seven hundred variants in one day. And hackers only need one to succeed.

In practice, that means the gap between threat creation and detection can’t be measured in weeks. It has to be measured in seconds. Legacy tools relying on machine learning (ML) and signatures can’t keep up with the speed of the modern threat environment. Every minute a threat goes unidentified is a minute that organizations are vulnerable.

We saw this firsthand with real-world malware from the Nimbus Manticore threat group, which is actively targeting aerospace, defense, manufacturing, and telecommunications organizations. When this malware first appeared, only three (3) vendors caught it on VirusTotal. Of course, we were one of them. For one variant, we were still the only vendor detecting it weeks after it appeared in the wild. That means organizations relying on the other tools have been, and may still be, exposed.

Moving beyond the current paradigm requires new solutions, specifically advanced deep learning AI. And only Deep Instinct has a deep learning framework purpose-built for cybersecurity.

The Problem Legacy Tools Can't Solve: Explaining What Hasn't Happened

Most AI tools in cybersecurity excel at explaining breaches after they have occurred. For example, they'll tell you exactly how the memory injection worked because the memory injection already happened.

They can give you detailed attribution on data exfiltration because someone’s data was actually exfiltrated. They are forensic tools built for post-mortems. In layman’s terms, you’ve already lost.

For decades, we've watched major breaches where technology didn't fail; the humans did. As I said during the webinar, “Systems screaming, alarms blaring, security teams dismissing alerts because they didn't understand them or lacked context.” In the end, threats are missed, benign files are blocked, nobody is happy, and no one can explain what happened until well after the fact.

Preemptive data security powered by deep learning solves the zero-day threat problem. AI explainability solves the problem of context: it explains why files were quarantined and makes security alerts understandable. Both are mission-critical for SOC teams.

DIANNA: Seconds to Explainability

The DSX Companion, also known as DIANNA, is Deep Instinct’s malware explainability solution. We demonstrated DIANNA by analyzing the malware we created live. In under 10 seconds, it produced a comprehensive analysis that typically takes SOC analysts hours or days to complete manually.

DIANNA didn't just say, "This is malicious." It explained how: sleep commands designed to evade sandbox detection, CPU tick monitoring to detect when AV solutions ramp up, process termination functions targeting other security tools, and encrypted sections hiding imports. It identified specific techniques that even experienced pen testers recognize as sophisticated evasion.

Explainability empowers humans to make informed decisions. When a department head calls saying you've "erroneously blocked" a file and demands you release it, your SOC analyst pulls up DIANNA's analysis and explains, in clear language, exactly why that file would have encrypted your data and dropped ransom notes on every desktop.

Consistency Matters as Much as Efficacy

The question isn't whether your AV tool will eventually catch the malware. It's whether it catches it on first contact.

Legacy tools plateau because they're built on reactive architectures. They need to see the threat, analyze its behavior, update signatures, and push definitions. By the time that cycle completes, an attacker could have generated hundreds of new variants and aimed them at your organization’s network.

Pre-execution prevention using deep learning works differently. It examines files statically, making decisions before execution based on deep contextual understanding, not on whether it has seen that specific hash before. In the webinar, a DSX Brain trained two years earlier was catching malware that didn't exist when it was trained, malware that fully updated tools were still missing.
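The limitation of hash-based signatures is easy to demonstrate. The minimal sketch below uses Python's standard `hashlib` to model an exact-match blocklist with harmless placeholder bytes; the blocklist and helper names are hypothetical. It illustrates only why exact-hash matching misses a trivially mutated file. It does not show how DSX or any deep learning model actually classifies files.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Exact-match signature: the SHA-256 digest of a file's bytes."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical stand-in for file contents (harmless placeholder bytes).
original = b"placeholder sample contents v1"
# A trivially mutated copy: the same bytes plus one extra byte.
variant = original + b"\x00"

# A toy blocklist containing only the hash of the original sample.
known_bad_hashes = {sha256_hex(original)}


def is_blocked(data: bytes) -> bool:
    """Return True only if this exact byte sequence has been seen before."""
    return sha256_hex(data) in known_bad_hashes


print(is_blocked(original))  # True
print(is_blocked(variant))   # False: a one-byte change defeats the exact-hash lookup
```

Because any change to the bytes produces an unrelated digest, a tool that depends on exact hashes has to see every variant before it can block it.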

AI. Everywhere.

We're early in our AI journey. The Dark AI tools we demonstrated in our webinar will likely look primitive next year. Criminal adoption of AI will accelerate as the technology becomes more sophisticated, accessible, and capable of automating the entire attack lifecycle.

The tools that worked for the past decade won't work for the next one. Tools that worked last year may not work at all. Not when threat actors generate variants faster than signatures are updated. Not when the window of opportunity is measured in seconds. Not when consistency across infinite variations becomes the difference between prevention and breach.

Watch our on-demand webinar to see the full live demonstration of Dark AI malware creation, DIANNA's real-time analysis, and why preemptive prevention through deep learning represents the shift cybersecurity needs now.

If you’re overwhelmed by the wave of Dark AI, there is hope. Request your free scan to see what malware may be lurking in your environment, and stay ahead of breaches.